This is one of the strangest psychological phenomena I’ve come across…

In a classic study[1], participants watched a wheel of fortune spin and were then asked to estimate the number of African countries in the United Nations. The wheel was rigged to land on certain numbers. In one group, the wheel stopped at the number 10, and in the other group, at 65.

The first group estimated an average of 25 countries in the UN. The second group estimated 45, an 80% increase. The obvious question arises: why would a number on a wheel of fortune affect people’s estimates?

The answer is the psychological phenomenon known as ‘anchoring’. Anchoring is a surprisingly simple idea: we are influenced by numerical reference points, even if they are utterly irrelevant. That’s it.

However, these trivial anchors can have a profound impact on those who are subject to judges’ rulings. For example, in one study, a journalist asked the judges whether a prison sentence would be higher or lower than a certain number. This number acted as an anchor and resulted in a 32% longer prison sentence. In another study, the plaintiff told the judges that a TV court show had awarded a certain amount. The result: a 700% increase in compensation.

The purpose of this article is not to embarrass judges; it’s to bring awareness to this phenomenon and ultimately defeat it.

In the first section, I provide a straight-to-the-point summary of the current research on the effect of the anchoring bias on judges. Secondly, I explain how the anchoring bias works. Thirdly, I present a mountain of evidence on the effects of the anchoring bias on judges. Fourthly, I examine the criticisms, and lastly, I consider how a lawyer may induce and defend against the anchoring bias.

Note: if you are a skeptical reader, skip straight down to the section entitled ‘evidence’ and read a few studies.

And now, I present to you, the anchoring bias.

I. Key Takeaways

1. The anchoring bias occurs when a person is influenced by numbers that act as a reference point. These numbers may be utterly irrelevant. For example, one study asked participants to name the last two digits of their social security number, then asked them how much they would bid for a bottle of wine. The participants with the digits 00 to 19 (low anchor) stated, on average, that they would bid $11.73 (low price) for the bottle of wine. The participants with the digits 80 to 99 (high anchor) stated, on average, that they would bid $37.55 (high price). The mere act of bringing numbers to mind resulted in a 220.12% difference.[2]

2. A total of 1090 judges from the USA, Canada, Germany and the Netherlands participated in the following studies, and the anchoring bias crept in in a number of ways:

a. A prosecutor’s absurd sentencing demand: One study resulted in a 27.68% longer prison sentence, another 50%, and another, 60%.

b. A journalist’s sentencing question: Resulted in a 32% longer prison sentence.

c. An absurd motion to dismiss: The defendant filed a motion to dismiss which alleged that the plaintiff’s compensation award would not meet the minimum requirements of $75,000. This number acted as an anchor and resulted in a 29.38% reduction in compensation.

d. Sentencing in years vs months: Different expressions of the same quantity can trigger the anchoring bias. For example, ‘2 years’ and ‘24 months’ mean the same thing, yet judges are unconsciously influenced more by the 24 months than by the 2 years. In one study, sentences were 43.3% shorter when judges sentenced in months.

e. The mention of a court TV show compensation award: Resulted in a 700% increase in compensation.

f. The order of cases: When 2 cases were presented one after the other, the first case acted as an anchor on the second case resulting in longer prison sentences. One study showed a 40% longer prison sentence, another study 46.43%, and another 442.86%.

g. Damages caps: The damages cap acted as an anchor on minor claims and ironically resulted in excessively high compensation. One study showed a 47.83% increase in compensation and another, 150% (from $100,000 to $250,000).

h. Unlawful information: In one study, judges were explicitly reminded that certain information must be ignored by law. The judges that were exposed to a past interest rate for a bankruptcy case gave a 22.39% higher interest rate ruling. In another study, judges were explicitly reminded to ignore the information learned in settlement negotiations at a pretrial settlement conference. This information resulted in a 172.28% increase in compensation.

3. Jurors were also affected by the anchoring bias. The bias manifested itself in various ways:

a. The plaintiff’s compensation requests: Firstly, the more the plaintiff requested, the more the jurors awarded, even at comically high requests, such as $1 billion. Secondly, the more money the plaintiff requested, the more the jurors thought the defendant was liable.

b. Probability of causation: The probability of causation acted as an anchor, resulting in a 100% increase in compensation. Obviously, the probability of causation of harm should not determine compensation; the amount of harm determines compensation. The effect is analogous to sentencing a man to prison for 60 years because the jurors are 100% certain he stole a paperclip.

4. It is difficult for a lawyer to prevent a judge from committing the anchoring bias. It seems that it’s easier to induce the bias than to counter it.

5. To reduce one’s own susceptibility to the anchoring bias, one strategy is to consciously argue against the number. Think of as many reasons as possible why the number is wrong. This helps to break the anchor’s hold.

II. Understanding the Anchoring Bias

The anchoring bias works both consciously and unconsciously. In both forms, the anchor acts as a reference point that keeps the mind nearby. The mind doesn’t seem to drift far from the reference point, hence the metaphor of a ship’s anchor. Below is a visual analogy.

Anchor, then Adjust

The first way anchoring works is through the concept known as ‘anchoring and adjustment.’[3] The mind jumps to the first anchor, then consciously adjusts from that point onwards.

This is not necessarily bad because an anchor can contain useful information.[4] For example, if you are selling your house, you might first look at the prices of the surrounding houses as an indication of your own selling price.

The problem occurs when the mind does not adjust in its entirety. When the mind stops short, the anchoring bias has been committed.

Mere Suggestion

Secondly, the bias works through mere suggestion. The numerical suggestion throws the mind in a certain direction. For example:

…the question “Do you now feel a slight numbness in your left leg?” always prompts quite a few people to report that their left leg does indeed feel a little strange.[5]

That slight tingle you felt in your leg, that’s analogous to what’s happening to the mind.

Once the suggestion has been made, anchoring induces a person to find reasons why the decision is similar to the anchor. And the opposite occurs also – the anchor tends to block reasons for why the decision is different to the anchor.[6] Anchoring does not necessarily work at the extreme level, such as blocking all reasons, but it roughly works in this manner, enough to cause a bias.

How this works is best explained by the classic ‘textbook price estimation’ study. The researchers asked students to estimate the average price of a textbook. But half the participants were first asked whether the average price was higher or lower than $7,163.52. The students who were exposed to the ludicrous number estimated the average price to be higher than the students who were not exposed.

…the absurdly high anchor leads people to recall highly expensive books. When they recognize that even the most expensive textbooks are far below the absurd anchor, they can reject the hypothesis that the average book is truly that costly. But when they then estimate the actual average price, they are thinking about expensive books, which produces higher estimates.[7]

Thus, the mere suggestion of the number launched the students to reason in a certain direction which resulted in irrationally higher estimations.

Now, let’s see how this phenomenon plays out on judges.

III. The Evidence: Anchoring Bias on Judges

1. THE PROSECUTORS DEMAND STUDY [8]

In the following study, 39 judges were tested on the effects of a prosecutor’s absurd sentencing demands. The judges were given a booklet containing a shoplifting case in which the accused had been caught stealing for the 12th time. They were asked to give their prison sentence after the prosecutor made their demand. But here’s the twist: the judges were informed that the prosecutor’s demand was entirely random. Therefore, the demand contained no useful information.

Results and Key Takeaways

The judges who were exposed to the low sentencing demand gave a sentence of 4 months.

The judges who were exposed to the high demand gave a sentence of 6 months.

This equals a 50% longer prison sentence.

Answers to Possible Objections

45% of the judges were women, the judges ranged from 29 to 61 years of age, and the average experience was 13 years. The more experienced judges were just as susceptible as the less experienced judges, yet the more experienced judges felt more confident in their decisions.

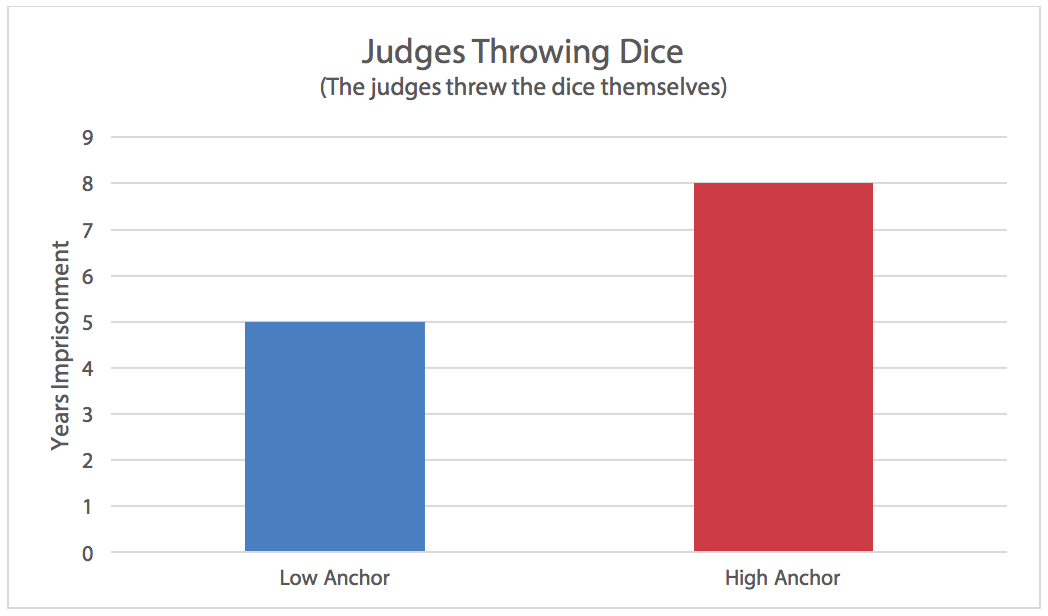

2. THE DICE STUDY [9]

The researchers in this study wanted to squash any doubts about the anchoring effect by designing the most absurd scenario possible.

52 young judges were given a booklet containing a case of theft. The judges were informed of the prosecutor’s sentencing demand; however, the specific number was left blank. And so, the judges were told to discover the sentencing demand by throwing a pair of dice. The dice were rigged so that they landed on a low number for one group and a high number for the other group.

Results and Key Takeaways

The judges whose dice landed on the low number gave a sentence of 5 months.

The judges whose dice landed on the high number gave a sentence of 8 months.

This equals a 60% longer prison sentence.

Weakness

The judges in this study were young and inexperienced. However, other studies have shown that experience did not reduce the anchoring effect.[10] Thus, this study still holds its validity.

How is This Study Relevant?

Even though judges typically do not throw dice before making sentencing decisions, they are still constantly exposed to potential sentences and anchors during sentencing decisions. The mass media, visitors to the court hearings, the private opinion of the judge’s partner, family, or neighbors are all possible sources of sentencing demands that should not influence a given sentencing decision.

Sentencing decisions may also be influenced by irrelevant anchors that simply happen to be uppermost in a judge’s mind when making a sentencing decision.

[Our research] suggests that irrelevant influences on sentencing decisions may be a widespread phenomenon.[11]

3. THE COMPUTER SCIENCE STUDY [12]

In this study, 16 German trial judges were given a rape case with a sentencing demand. The sentencing demand is normally given by a prosecutor but in this study, the demand was given by a first-year computer science student.

One group received a demand of 12 months (low anchor), and the other, 34 months (high anchor).

Results & Key Takeaways

1. The judges who received the student’s sentencing demand of 12 months sentenced the criminal to 28 months in prison.

2. The judges who received the student’s sentencing demand of 34 months sentenced the criminal to 35.75 months in prison.

3. This is an increase of 27.68%.

Answers to Possible Objections

15 of the 16 judges found that the computer science student’s demand was irrelevant to their decision. The judges had an average of 15.4 years of experience.

4. THE JOURNALIST’S PHONE CALL STUDY [13]

This study measured the effects of a journalist’s phone call which acted as an anchor.

23 judges and 19 prosecutors from Germany were presented with a booklet of information containing a realistic rape case. In the booklet, the following information was given:

During a court recess [you] receive a telephone call from a journalist who directly asks…, “Do you think that the sentence for the defendant in this case will be higher or lower than 1/3 year(s)?” (low/high anchor).

The judges were then asked to hand down a prison sentence.

Results and Key Takeaways

The judges who were exposed to the low anchor gave a prison sentence of 25 months.

The judges who were exposed to the high anchor gave a prison sentence of 33 months.

This equals a 32% longer prison sentence.

Answers to Possible Objections

The participants ranged from 27 to 61 years of age and there were equal numbers of men and women. The average experience of the judges was 10 years. The more experienced judges were just as susceptible as the less experienced judges, yet the more experienced judges felt more confident in their decision than the less experienced judges.

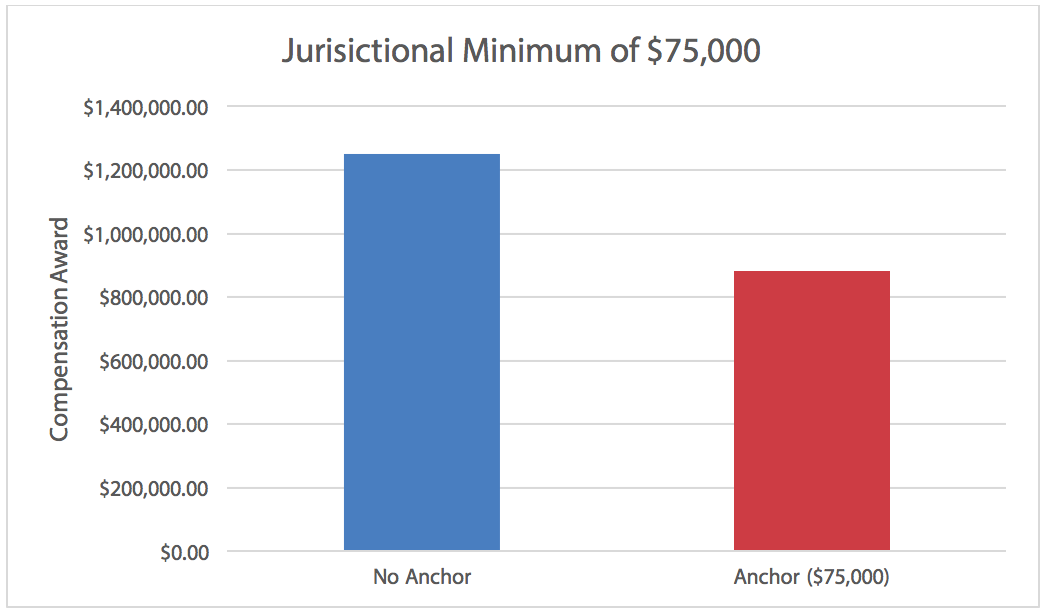

5. THE MOTION TO DISMISS STUDY [14]

The researchers in this study wanted to find out if a motion to dismiss could influence judges’ compensation awards. The number contained in the motion to dismiss would act as the anchor.

167 judges were given a personal injury scenario in which the plaintiff was hit by a truck due to the truck’s faulty brakes. Half of the judges were given additional information that the defendant had filed a motion to dismiss because the damages did not add up to the minimum of $75,000 (the anchor). 97.7% of judges denied the motion to dismiss because the $75,000 was seen as comically low.

Results & Key Takeaways

The judges that did not receive the anchor awarded the plaintiff $1,249,000.

The judges that received the anchor of $75,000 awarded the plaintiff $882,000.

This equals a 29.38% reduction in compensation.

6. THE MONTHS VS YEARS STUDY [15]

135 judges were given a case of voluntary manslaughter. The accused stabbed to death a man who was having an affair with his fiancée.

Half the judges were asked to sentence the accused in years, and the other half were asked to sentence in months.

Results & Key Takeaways

The judges who were required to sentence in years sentenced the criminal to 9.7 years in prison.

The judges who were required to sentence in months sentenced the criminal to the equivalent of 5.5 years in prison.

This equals a 43.3% decrease.

Explanation: The sentence expressed in ‘months’ acted as an anchor which dragged the total sentence downwards. A simple illustration best explains this phenomenon: 12 months and 1 year are equivalent, but the number 12 is larger than the number 1. Thus, the person becomes anchored on the number 12 and hands down a shorter sentence than they would have if they had sentenced in years. The number 12 feels more extreme than the number 1.

Answers to Possible Objections

The judges had an average of 13 years of experience and 29% of judges were female. Males and females suffered from the anchoring bias equally.

7. THE COURT TV SHOW STUDY [16]

82 judges were given information about an employment discrimination case. Throughout the plaintiff’s employment, the defendant said offensive phrases such as “go back to Mexico.” Later, the defendant fired the plaintiff and the plaintiff sued.

Half of the judges were given additional information which stated that the plaintiff saw a TV court show and the show awarded the plaintiff $415,300. This number acted as the anchor.

Results & Key Takeaways

The judges who did not receive the mention of a TV court show compensation (no anchor) awarded the plaintiff $6,250.

The judges who received the mention of a TV court show compensation (the anchor) awarded the plaintiff $50,000.

This equals an increase of 700%.

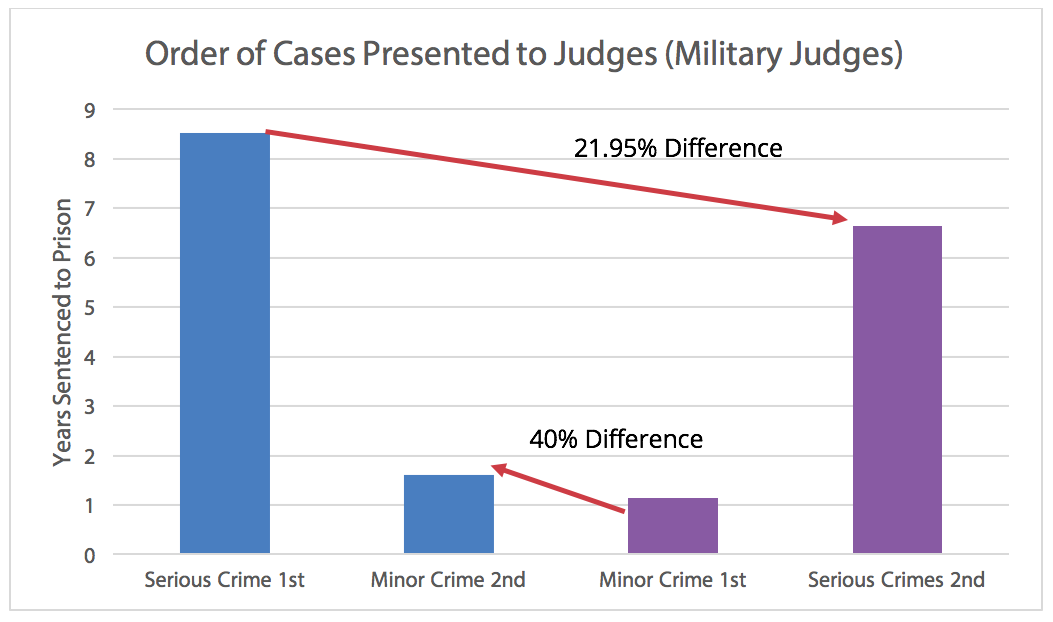

8. THE ORDER OF CASES STUDY [17]

A judge may hear a range of cases, some serious and some minor. The order in which the judge hears the cases may affect the outcome of the subsequent case. For example, a judge who first rules on a manslaughter case, then rules on a threat of violence case, may become anchored on the manslaughter case, which may result in a higher sentence in the threat of violence case.

And this is what the researchers attempted to find out.

This study was run 3 times: first with 71 newly appointed military judges, second with 39 Arizona judges, and last with 62 Dutch judges.

Half the judges viewed the serious crime first (manslaughter), followed by the minor crime (threat of violence). And the other half viewed the minor crime first, followed by the serious crime.

Results & Key Takeaways

Military Judges:

When the serious crime was heard first, the sentence on the minor crime was longer by 40%.

When the minor crime was heard first, the sentence on the serious crime was shortened by 21.95%.

Arizona Judges:

When the serious crime was heard first, the minor crime was raised by 46.43%.

When the minor crime was heard first, the serious crime was unaltered. This means that the minor crime did not influence the serious crime.

Dutch Judges:

When the serious crime was heard first, the minor crime was raised by 442.86%.

When the minor crime was heard first, the serious crime was unaltered. (Note, we can see a difference between the two ‘Serious Crimes’ figures, as the first is at 6.46 and the second at 5.79. However, the difference was not statistically significant.)

Answers to Possible Objections

24% of the military judges were female, 39% of the Arizona judges were female, and 39% of the Dutch judges were female. The Arizona judges had an average of 11.5 years’ experience as a judge, and the Dutch judges an average of 13.2.

Conclusions

When the serious crime was heard first, this anchored the second minor crime which resulted in an increase in sentencing. The smallest increase was 40% and the largest increase was 442.86%.

When a minor crime was heard first, it did not consistently anchor the second serious crime.

9. THE DAMAGES CAP STUDY [18]

Can a damages cap act as an anchor?

115 Canadian and 65 New York trial judges were given an automobile accident case and were asked to award damages for pain and suffering. Due to the injuries caused by the car crash, the plaintiff suffered from severe headaches and was unable to concentrate at work, nor was he able to play with his kids.

Half of the judges were told that the damages cap was $322,236 (Canadian cap) / $750,000 (New York cap) and the other half of the judges were told nothing.

Results & Key Takeaways

Canadian Judges:

The judges who were not informed of a damages cap awarded the plaintiff $57,500.

The judges who were informed of a damages cap awarded the plaintiff $85,000.

Therefore, the damages cap ironically resulted in an increase of 47.83%.

New York Judges:

The judges who were not informed of a damages cap awarded the plaintiff $100,000.

The judges who were informed of a damages cap awarded the plaintiff $250,000.

Therefore, the damages cap ironically resulted in an increase of 150% (the award rose to 250% of the uncapped amount).

10. THE BANKRUPTCY STUDY [19]

In this study, 112 highly specialised bankruptcy judges were given the following scenario and asked to make a ruling.

A truck driver borrowed a few thousand dollars at a 21% interest rate from a small loans company. Later, the truck driver restructured the loan but they couldn’t agree on the new interest rate.

The bankruptcy judges were required to determine the interest rate.

Half of the judges were given the following sentence: “[t]he parties agree that… the original contract interest rate is irrelevant to the court's determination.” The other half were informed of the interest rate which was designed to act as an anchor. It read: “[t]he parties agree that… the original contract interest rate of 21% is irrelevant to the court's determination.” The researchers emphasised the importance of ignoring the 21% by giving the judges a precedent to read that stated judges must ignore the initial interest rate.

Results & Key Takeaways

The judges who were given no anchor set an average interest rate of 6.52%.

The judges who were given an anchor set an average interest rate of 7.98%.

This results in a 22.39% increase. Therefore, the judges could not ignore the anchor even under explicit instructions under law.

Answers to Possible Objections

These differences might seem small. The differences, however, are obviously meaningful to debtors struggling to crawl out of bankruptcy; a one point difference on a $10,000 loan can mean hundreds or even thousands of dollars over the life of the loan.

11. THE PRETRIAL SETTLEMENT CONFERENCE STUDY [20]

Can judges ignore information that is learned at a pretrial settlement conference?

265 judges were given a booklet of information on an automobile accident case. In the booklet, they were told to imagine that they previously attended a pretrial settlement conference and during the conference, the plaintiff’s compensation demands were rejected. One group were told the plaintiff demanded $10 million and the other group were not told the specific demand. The $10 million acted as the anchor.

The judges were told to make a ruling as if it was a real trial. The judges were also explicitly reminded that the law requires them not to consider the negotiations at the pretrial settlement conference.

Results and Key Takeaways

The judges who did not receive the plaintiff’s demand awarded the plaintiff $808,000.

The judges who received the plaintiff’s $10,000,000 demand awarded the plaintiff $2,200,000.

This equals a 172.28% increase.
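The percentages quoted for the judge studies can be re-derived from the reported group means. Below is a minimal sketch of that arithmetic for a selection of the studies in this section. The function name `percent_change` and the study labels are my own; the figures are the means reported above, and the first number in each pair is the comparison group (low anchor, no anchor, or sentencing in years).

```python
def percent_change(baseline, anchored):
    """Relative change from the baseline group to the anchored group, in percent."""
    return (anchored - baseline) / baseline * 100


# (label, baseline mean, anchored mean), copied from the studies above
studies = [
    ("Prosecutor's demand (months)", 4, 6),
    ("Dice (months)", 5, 8),
    ("Computer science student (months)", 28, 35.75),
    ("Journalist's phone call (months)", 25, 33),
    ("Motion to dismiss ($)", 1_249_000, 882_000),
    ("Months vs years (years)", 9.7, 5.5),
    ("Court TV show ($)", 6_250, 50_000),
    ("Bankruptcy (interest rate)", 6.52, 7.98),
    ("Pretrial settlement conference ($)", 808_000, 2_200_000),
]

# Rounded to two decimals, matching the precision used in the article
results = {
    label: round(percent_change(baseline, anchored), 2)
    for label, baseline, anchored in studies
}

for label, change in results.items():
    print(f"{label}: {change:+.2f}%")
```

A negative result means the anchor pulled the outcome down, as in the motion to dismiss study and the months vs years study.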

IV. The Evidence: Anchoring Bias on Jurors

1. THE BIRTH CONTROL PILL STUDY [21]

In this study, the researchers tested the effects of the anchoring bias on mock jurors. The first study measured the effect of a monetary anchor on the probability of causation. The second study measured the effect of a monetary anchor on compensation awards.

56 mock jurors were given a booklet of information containing a personal injury case. In the case, the plaintiff argued that her birth control pill caused her ovarian cancer. The plaintiff was suing the Health Maintenance Organisation for prescribing her a cancer-causing pill.

The information for all participants was identical except for the amount of damages the plaintiff requested. The researchers asked the mock jurors a series of questions.

Results & Key Takeaways

On a scale of 1% – 100%, the mock jurors were asked to decide whether the defendant caused the plaintiff's injury.

The jurors who received the low anchor judged a 26.4% probability that the defendant caused the plaintiff’s injury.

The jurors that received the high anchor judged a 43.9% probability that the defendant caused the plaintiff's injury.

Therefore, the higher the anchor, the higher the perceived probability of causality.

Next, jurors were asked to judge how much money the plaintiff deserved. The only factor that changed between jurors was the amount of money that the plaintiff requested.

The group that received a $100 request awarded the plaintiff $992.27.

The group that received a $20,000 request awarded the plaintiff $36,315.50.

The group that received a $5 million request awarded the plaintiff $442,413.39.

The group that received a $1 billion request awarded the plaintiff $488,942.41.

Therefore, the higher the request, the more the jurors awarded the plaintiff.
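To make the pattern in these four groups easier to see, here is a small sketch that compares each mean award with the amount requested. The variable and function names are hypothetical; the figures are the ones reported above. It is only an illustration of the reported numbers, not a re-analysis of the study.

```python
# (amount requested by the plaintiff, mean amount awarded), as reported above
requests_and_awards = [
    (100, 992.27),
    (20_000, 36_315.50),
    (5_000_000, 442_413.39),
    (1_000_000_000, 488_942.41),
]


def award_to_request_ratio(request, award):
    """How large the mean award was relative to the amount requested."""
    return award / request


awards = [award for _, award in requests_and_awards]
ratios = [award_to_request_ratio(r, a) for r, a in requests_and_awards]

# Each larger request came with a larger mean award...
awards_always_rise = all(a < b for a, b in zip(awards, awards[1:]))
# ...but the award shrank as a share of the request.
ratios_always_fall = all(a > b for a, b in zip(ratios, ratios[1:]))

for (request, award), ratio in zip(requests_and_awards, ratios):
    print(f"requested ${request:,}: awarded ${award:,.2f} ({ratio:.4f}x the request)")

print("awards rise with the request:", awards_always_rise)
print("award-to-request ratio falls:", ratios_always_fall)
```

In other words, the anchor keeps pulling the award upwards, but with diminishing returns: the jump from $5 million to $1 billion moved the award far less than the jump from $100 to $20,000.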

Answers to Possible Objections

Firstly, the amount of money that the plaintiff requested did not influence the jurors’ perceptions of the plaintiff’s suffering. That is, an extreme money request did not lead the jurors to perceive that the plaintiff had suffered more.

Secondly, the jurors reasoned with full knowledge that the plaintiff’s request was absurd.

Thirdly, the jurors felt that the plaintiffs who requested high compensation were more selfish and less generous, yet, paradoxically they awarded the same people higher compensation.

2. THE 2ND BIRTH CONTROL PILL STUDY [22]

Before I proceed with this study, I will start with a simple analogy as it best explains this bizarre phenomenon.

Imagine a group of jurors deciding a case of theft in which the evidence is extremely clear. There’s CCTV footage of the theft, there are several witnesses, and the thief himself has even admitted his crime. The jurors are so convinced of the thief’s guilt that they sentence him to 60 years in prison. But here’s the twist: he stole a paperclip.

Well, this exaggerated illustration is what happened in the study below. A person’s punishment or compensation is not related to how guilty the defendant is. It’s related to the seriousness of the crime or the level of the plaintiff’s suffering.

Thus, this study measured the effect of the probability of causation (anchor) on the jurors’ compensation awards.

162 mock jurors were given a booklet of information containing a personal injury case. The plaintiff argued that her birth control pill caused her ovarian cancer. The plaintiff is suing the Health Maintenance Organisation for prescribing her a cancer-causing pill. The information for all participants was identical except for the probability of causation. That is, did the pill cause the cancer? One group received evidence showing a 90% probability of causation and the other group received evidence showing a 10% probability of causation.

Results and Key Takeaways

Participants who received the low anchor (10% probability of causation) awarded the plaintiff $300,000.

Participants who received the high anchor (90% probability of causation) awarded the plaintiff $600,000.

This equals a 100% increase in compensation.

The higher compensation awards were probably due to negative feelings towards the defendant. The ‘90% probability group’ had strong negative feelings toward the defendant, and so they probably searched for reasons why the defendant deserved to be punished by awarding higher compensation to the plaintiff.

Answers to Possible Objections

The $300,000 and $600,000 awards were not correlated with the jurors’ perception of the plaintiff’s suffering. That is, the ‘90% probability’ group did not award the plaintiff $600,000 because they thought that the plaintiff suffered more.

V. Criticisms of Anchoring

The biggest criticism against the anchoring bias is that the anchor itself may have contained some useful information. For example, in ‘The TV Court Show Study’ (see above):

The judges who saw the reference to $415,300… could have interpreted this as an indication that the case was much more serious than the facts suggested.[23]

This kind of explanation occurs for virtually all studies. The researchers continually invent a reason for why the anchor is not truly an anchor.

The second criticism is that the participants didn’t take the absurd studies seriously. For example, in ‘The Dice Study’ (see above):

…using dice as a reference point is obviously strange and might have undermined the judges' willingness to take the study seriously. It is hard to imagine what the judges thought that the point of the dice roll was in this experiment.

However, I don’t buy either of these criticisms. One can construct a conspiracy for all studies. For example, maybe the dice in ‘The Dice Study’ were not random at all. Maybe the government was trying to send the judges a secret message through the dice… Or maybe the judges lied and thought the computer science student (see study above) was a hidden genius who had been reading law textbooks since the age of 6. Researchers could experiment for the next 50 years and design more and more sophisticated studies, but I still think a reason can be invented for why the anchor contained some secret information.

It is true that anchors can contain information but at some point, denial becomes wacky. There is an overload of evidence over the last 40 years coming from many fields all pointing in the same direction: we are affected by numerical reference points.

AUTHOR’S OPINION

I answer the following two questions: 1) Will the anchoring bias affect the outcome of a court case? 2) If the anchoring bias occurred in a court case, will we know it?

Will the anchoring bias affect the outcome of a court case?

1) Firstly, a strong criticism against the anchoring bias is that real court cases contain more information than the studies provide. Some argue that the additional information may ‘mute’ the effects of anchoring.[24]

However, a study on real-estate agents provides some counter-evidence:

In an experiment conducted some years ago, real-estate agents were given an opportunity to assess the value of a house that was actually on the market. They visited the house and studied a comprehensive booklet of information that included an asking price. Half the agents saw an asking price that was substantially higher than the listed price of the house; the other half saw an asking price that was substantially lower. Each agent gave her opinion about a reasonable buying price for the house and the lowest price at which she would agree to sell the house if she owned it. The agents were then asked about the factors that had affected their judgment.

Remarkably, the asking price was not one of these factors; the agents took pride in their ability to ignore it. They insisted that the listing price had no effect on their responses, but they were wrong: the anchoring effect was 41%. Indeed, the professionals were almost as susceptible to anchoring effects as business school students with no real-estate experience, whose anchoring index was 48%.[25]

There are two key factors that stood out in this study. i) The real-estate agents physically visited the house, which means the study extended beyond a mere written description. This means the anchoring bias reached further than the clinical setting, and may possibly extend into the courtroom. ii) The booklet of information was comprehensive. The additional information provided in this study did not ‘mute’ the effects of the anchoring bias.
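The quoted passage reports an ‘anchoring index’ without defining it. As I understand it, the index is the difference between the two groups’ mean estimates divided by the difference between the two anchors, expressed as a percentage. The sketch below assumes that definition (the function name is my own) and applies it to two examples from this article whose anchors and results are both stated: the wheel of fortune study and the computer science student study.

```python
def anchoring_index(low_anchor, high_anchor, low_estimate, high_estimate):
    """Share of the gap between two anchors that shows up in the mean estimates.

    Returned as a percentage: 0 means the anchors were ignored entirely,
    100 means the estimates moved exactly as far apart as the anchors.
    Assumed definition; see the lead-in above.
    """
    if high_anchor == low_anchor:
        raise ValueError("the two anchors must differ")
    return (high_estimate - low_estimate) / (high_anchor - low_anchor) * 100


# Wheel of fortune: anchors 10 and 65, mean estimates 25 and 45 countries
wheel_index = anchoring_index(10, 65, 25, 45)

# Computer science student: demands of 12 and 34 months,
# mean sentences of 28 and 35.75 months
student_index = anchoring_index(12, 34, 28, 35.75)

print(f"Wheel of fortune: {wheel_index:.1f}%")
print(f"Computer science student: {student_index:.1f}%")
```

Under this assumed definition, both examples land in the mid-30s percent, the same order of magnitude as the 41% reported for the real-estate agents.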

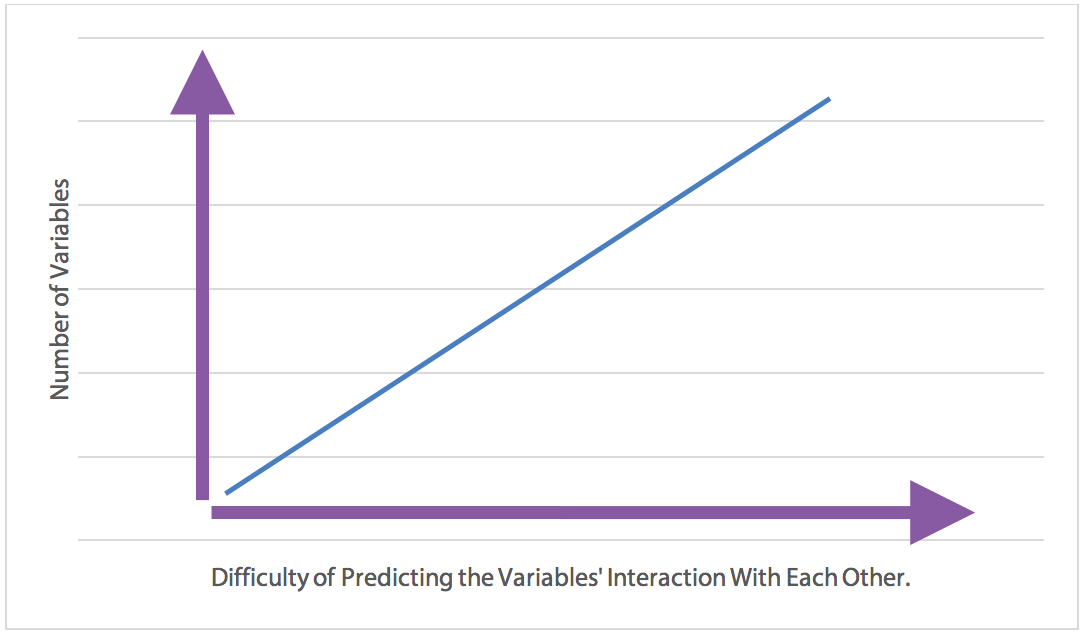

2) Secondly, a criticism I have against the anchoring bias, and all biases, is the following:

As the number of variables increases, it becomes harder to predict the interaction of these variables. This is illustrated by the graph below.

While I do not doubt the existence of the anchoring bias, I do doubt whether we can predict whether it will occur in a court case, due to the sheer number of variables that may interact with each other.

For example, studies have shown that judges are affected by: time of day;[26] physical attractiveness of the defendant;[27] baby-faced defendants;[28] the age of the defendant;[29] framing;[30] egocentric bias;[31] hindsight bias;[32] representative bias;[33] and so on.
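To give a rough sense of scale, the sketch below counts how many ways the influences just listed (plus anchoring itself) could combine. Counting combinations is only a crude proxy for how hard prediction becomes, and treating each influence as a simple present-or-absent factor is my own simplifying assumption.

```python
from math import comb

# Anchoring plus the eight influences listed above
influences = [
    "anchoring",
    "time of day",
    "physical attractiveness",
    "baby-faced defendant",
    "age of the defendant",
    "framing",
    "egocentric bias",
    "hindsight bias",
    "representative bias",
]

n = len(influences)

# Number of distinct pairs of influences that could interact
pairwise_interactions = comb(n, 2)

# Number of groups of two or more influences that could act together:
# all subsets, minus the empty set and the n single-influence subsets
joint_combinations = 2 ** n - n - 1

print(f"{n} influences")
print(f"{pairwise_interactions} possible pairs")
print(f"{joint_combinations} possible combinations of two or more")

# How the count of combinations grows as influences are added
for k in range(2, n + 1):
    print(k, 2 ** k - k - 1)
```

Each additional influence roughly doubles the number of combinations, which is the intuition behind my doubt above.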

Even if the judge explicitly states his or her reasoning, I think one can still treat those reasons with some skepticism. The 7th Circuit US judge Richard Posner argues that it can be hard to know what the judge’s real reasons for his or her decision are.[34] Posner asserts that judges may say one thing, but really mean another. This may be due to political correctness; conforming to the court to obtain a promotion; posthumous fame; ideological propensity; the judgment having been written by clerks; and so on.

Furthermore, the book The Legal Analyst [35] provides a smorgasbord of ways a judge may decide a case, such as: ex ante; ex post; deterrence;[36] Kaldor-Hicks efficiency; thinking at the margin; and many others. The author also emphasises that the judge may not explicitly state these reasons for their decision.

And, finally, the jurist Oliver Wendell Holmes Jr. identified this over 100 years ago:

The life of the law has not been logic; it has been experience. The felt necessities of the time, the prevalent moral and political theories, intuitions of public policy, avowed or unconscious, even the prejudices which judges share with their fellow-men, have had a good deal more to do than the syllogism in determining the rules by which men should be governed.[37]

These variables demonstrate the sheer number of influences that can affect the outcome of a court case. So what would happen if the defense used the anchoring bias and, simultaneously, the defendant was unattractive? Or if the case was a ‘no-brainer’ from a legal perspective, but the defense used every trick under the sun? Would the variables cancel each other out or interact in an unknown way?

If the anchoring bias occurred in a court case, would we know it?

Here, the classic statistical maxim ‘correlation does not imply causation’ applies. If the outcome of a court case was lower or higher than expected, we will probably not know what the true cause was. This is due to the enormous range of variables that may apply, as demonstrated under the previous question. Thus, as the number of variables increases, it becomes harder to infer the causal factor or factors. Observe the illustration below.

The number of variables dramatically increases the uncertainty in identifying the true cause. If the anchoring bias occurred in a court case, we probably won’t know it. Was the increase due to the anchoring bias, or due to deterrence, or due to the time of day, or the egocentric bias, or unattractiveness, etc., etc.?
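To put rough numbers on this, here is a minimal sketch that simply counts how many interactions are possible among the influences listed above. It assumes, purely for illustration, that any factor could interact with any other; that assumption is mine and is not drawn from the cited studies, and the function names are hypothetical.

```python
from math import comb

# Influences on judges listed earlier in this section (footnotes 26 to 33).
FACTORS = [
    "time of day",
    "attractiveness",
    "baby-facedness",
    "age",
    "framing",
    "egocentric bias",
    "hindsight bias",
    "representative bias",
]


def pairwise_interactions(n: int) -> int:
    """Number of distinct pairs that can be formed from n variables."""
    return comb(n, 2)


def possible_combinations(n: int) -> int:
    """Number of subsets containing at least two of n variables."""
    return 2 ** n - n - 1


# Compare a few small cases with the eight listed factors,
# and with anchoring added as a ninth.
for n in (2, 4, len(FACTORS), len(FACTORS) + 1):
    print(n, pairwise_interactions(n), possible_combinations(n))
```

Under that assumption, the eight listed factors already allow 28 pairs and 247 combinations of two or more. Adding anchoring as a ninth factor raises these to 36 pairs and 502 combinations, which gives a sense of how quickly the candidate explanations for any one outcome multiply.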

CONCLUSION

I therefore conclude the following:

If the number of variables is high, then I speculate that it’s near impossible to know whether the anchoring bias will occur in an actual court case.

If the number of variables is high, then I speculate that it’s near impossible to know whether the anchoring bias has affected a court case.

This is not to say that it will not occur; it is to say that we just don’t know.

However, as the evidence is overwhelmingly in favour of the anchoring bias, I assert that it is safer to defend against it than to let the bias creep through.

VI. Inducing and Defending the Anchoring Bias

The following section covers the ways a lawyer may potentially exploit the anchoring bias and the ways to potentially defend against it. The purpose here is not to endorse dirty tricks; it’s to recognise when and where the anchoring bias may arise so that one can counter it.

1. INDUCING THE BIAS

Rapid Decision Making

Judges, like other decision makers, are most likely to rely on cognitive shortcuts, such as anchoring, when they face time constraints that force them to process complex information.[38]

A judge who is inundated with cases will be more prone to cognitive biases.[39] They may not have the luxury of carefully going through each case with a fine-tooth comb, and this is where the threat of exploitation lies. Judges themselves have admitted that their busyness leads to ‘less-than-optimal decision making.’[40]

Inserting Irrelevant Anchors

All studies in this article contribute to the evidence that humans are prone to influence by irrelevant anchors. Thus, if lawyers slip in a few random reference points here and there, judges may be unconsciously influenced.

2. DEFENSES

I must admit, finding defenses against the anchoring bias was difficult. Studies have attempted to reduce the anchoring bias but have failed.[41] There have been many suggestions for reforming the legal system but, until the legal system is reformed, they are of little use to lawyers. Some of these suggestions include:

…attempting to train judges to avoid the impact of anchoring, prohibiting litigants from mentioning numbers that might operate as anchors (such as a damage cap or plaintiffs ad damnum), separating decision-making functions, requiring explanations for the amount of damages awarded or the sentence imposed, relying on aggregated data, and cabining discretion with sentencing guidelines and damage schedules.[42]

Nonetheless, one way to potentially reduce the anchoring effect on sentencing is to place a heavy emphasis on past decisions. Judges intentionally look for reference points[43] and in one study, the judges became frustrated because the researchers intentionally did not provide one.[44] It seems that judges both consciously and unconsciously look for an anchor, thus past decisions may fill this role.

On an individual level, the best suggestion I’ve found to fight the anchoring bias is to find as many reasons as possible why the anchor is wrong. Consciously counter-argue and debunk the number, as this may break the mind’s natural gravitation towards the reference point.

3. FAILED DEFENSES

The following is a list of factors, methods and strategies that do not work or have little effectiveness.

A Judge’s experience: Experienced judges are just as susceptible to trivial anchors as inexperienced judges.[45] One study showed that experienced judges were equivalent to newly admitted lawyers.[46] Another study showed that experienced judges were equivalent to law students.[47] There was one key difference between the experienced and inexperienced judges: the experienced judges were more certain of their judgments.

Relying on specialised judges to be immune to anchoring: Specialist judges from the US were immune to other biases, such as ‘omission bias, a debtor's race, a debtor's apology, and "terror management" or "mortality salience"’,[48] but were not immune to anchoring.

Expecting the judge to ignore irrelevant information: All studies in this article provide counter evidence.

Expecting the judge to ignore irrelevant information, even when they must ignore it by law: In the study Inside the Bankruptcy Judge’s Mind,[49] the judges were explicitly informed that the law required them to ignore a percentage rate and not include it in their decision. This explicit instruction had no effect, as the judges still unintentionally anchored on the percentage rate. The same thing happened in another study.[50] The judges were explicitly reminded that, by law, the number could not be taken into consideration. However, they still anchored on the number. Therefore, explicit instructions, even those required by law, will likely not reduce the anchoring bias.

End Notes

[1] Judgment Under Uncertainty: Heuristics and Biases (1974) by Daniel Kahneman & Amos Tversky

[2] "Coherent Arbitrariness": Stable Demand Curves without Stable Preferences (2003) by Dan Ariely, George Loewenstein and Drazen Prelec

[3] Judgment Under Uncertainty: Heuristics and Biases (1974) by Daniel Kahneman & Amos Tversky

[4] Thinking, Fast and Slow (2011) by Daniel Kahneman

[5] Thinking, Fast and Slow (2011) by Daniel Kahneman [121]

[6] The Limits of Anchoring (1994) by G. B. Chapman & E. J. Johnson

[7] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[8] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[9] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[10] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack; Experts, Amateurs, and Real Estate: An Anchoring-and-Adjustment Perspective on Property Pricing Decisions (1987) by Gregory B. Northcraft & Margaret A. Neale

[11] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[12] Sentencing Under Uncertainty: Anchoring Effects in the Courtroom (2001) by Birte Englich & Thomas Mussweiler

[13] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[14] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[15] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[16] The Hidden Judiciary: An Empirical Examination of Executive Branch Justice (2009) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[17] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[18] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[19] Inside the Bankruptcy Judge’s Mind (2006) by Jeffrey J. Rachlinski, Chris Guthrie, & Andrew J. Wistrich

[20] Can Judges Ignore Inadmissible Information? The Difficulty of Deliberately Disregarding (2005) by Andrew J. Wistrich, Chris Guthrie, & Jeffrey J. Rachlinski

[21] The More You Ask for, the More You Get: Anchoring in Personal Injury Verdicts (1996) by Gretchen B. Chapman & Brian H. Bornstein

[22] The More You Ask for, the More You Get: Anchoring in Personal Injury Verdicts (1996) by Gretchen B. Chapman & Brian H. Bornstein

[23] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[24] Can Judges Ignore Inadmissible Information? The Difficulty of Deliberately Disregarding (2005) by Andrew J. Wistrich, Chris Guthrie, & Jeffrey J. Rachlinski

[25] Thinking, Fast and Slow (2011) by Daniel Kahneman

[26] Extraneous factors in judicial decisions (2011) by Shai Danziger, Jonathan Levav, and Liora Avnaim-Pesso

[27] http://www.thelawproject.com.au/blog/attractiveness-bias-in-the-legal-system; Natural Observations of the Links Between Attractiveness and Initial Legal Judgments (1991) by A. Chris Downs and Phillip M. Lyons

[28] The Impact of Litigants' Baby-Facedness and Attractiveness on Adjudications in Small Claims Courts (1991) by Leslie A. Zebrowitz and Susan M. McDonald

[29] What's In A Face? Facial Maturity And The Attribution Of Legal Responsibility (1988) by Diane S. Berry

[30] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[31] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[32] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[33] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[34] Richard Posner, Empirical Legal Studies Conference keynote held by University of Chicago Law School: https://youtu.be/18i5yUNJq30

[35] The Legal Analyst: A Toolkit for Thinking About the Law (2007) by Ward Farnsworth

[36] http://www.abc.net.au/news/2016-12-16/nurofen-fined-6m-for-misleading-consumer/8126450

[37] The Common Law (1881) by Oliver Wendell Holmes

[38] Can Judges Ignore Inadmissible Information? The Difficulty of Deliberately Disregarding (2005) by Andrew J. Wistrich, Chris Guthrie, & Jeffrey J. Rachlinski

[39] Blinking on the Bench: How Judges Decide Cases (2007) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[40] Blinking on the Bench: How Judges Decide Cases (2007) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[41] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[42] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie

[43] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[44] Inside the Judicial Mind (2001) by Chris Guthrie, Jeffrey J. Rachlinski, & Andrew J. Wistrich

[45] Can Judges Make Reliable Numeric Judgments: Distorted Damages and Skewed Sentences (2015) by Jeffrey J. Rachlinski, Andrew J. Wistrich, & Chris Guthrie; Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[46] Playing Dice With Criminal Sentences: The Influence of Irrelevant Anchors on Experts’ Judicial Decision Making (2006) by Birte Englich, Thomas Mussweiler, & Fritz Strack

[47] Sentencing Under Uncertainty: Anchoring Effects in the Courtroom (2001) by Birte Englich & Thomas Mussweiler

[48] Inside the Bankruptcy Judge’s Mind (2006) by Jeffrey J. Rachlinski, Chris Guthrie, & Andrew J. Wistrich

[49] Inside the Bankruptcy Judge’s Mind (2006) by Jeffrey J. Rachlinski, Chris Guthrie, & Andrew J. Wistrich

[50] Can Judges Ignore Inadmissible Information? The Difficulty of Deliberately Disregarding (2005) by Andrew J. Wistrich, Chris Guthrie, & Jeffrey J. Rachlinski

![[Image taken from http://blog.kameleoon.com/en/cognitive-biases/]](https://images.squarespace-cdn.com/content/v1/5817bb2746c3c4a605334446/1494294770375-CHGBE5BWNRAIF0QN1US6/image-asset.jpeg)